Create an RSS feed from a website with AWS

My faculty at my university publishes all important announcements, such as cancelled or postponed lectures, on a website. It is called “Schwarzes Brett” (bulletin board), and if you don’t want to end up in an empty classroom, you should read it every day before you make your way to class.

For me this happened one time too often. This is why I created this project.

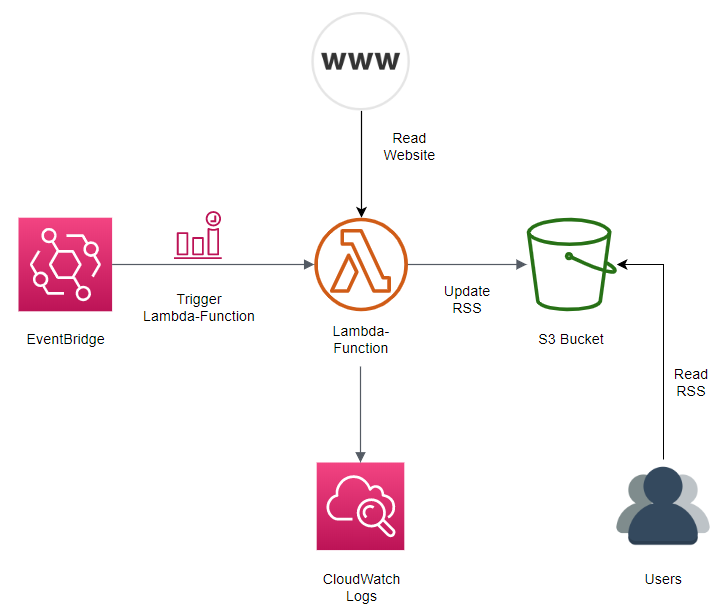

In this project I will use AWS to read the website and create an RSS feed of all new entries. The RSS reader on my smartphone will show me push notifications every time the website is updated. This way I will always be up to date and standing in an empty room is a thing of the past.

This project will use:

- AWS Lambda to read and parse the website, create the RSS feed and save the feed to AWS S3.

- AWS S3 to provide a public link to the RSS feed.

- Amazon EventBridge to trigger the Lambda function, which updates the RSS feed.

- AWS IAM to handle permissions of every service.

- AWS CloudFormation to create all needed resources.

The architecture of the project will look like this:

Every resource will be deployed using AWS CloudFormation and the AWS CLI.

Lambda function

The Lambda function is written in Python and is highly specific to the website you want to turn into an RSS feed.

Used packages:

- beautifulsoup4, to read and parse the website.

- rfeed, to create the RSS feed.

- boto3, to interact with AWS resources in python.

The following code reads the website, finds all articles, converts them to RSS items, filters out all articles that are older than 2 weeks, creates the RSS feed and updates the xml file in AWS S3. To make debugging easier, I added a lot of print statements, which end up as logs in CloudWatch Logs.

The name of the S3 bucket is read from the environment variables of the Lambda function as os.environ['S3_BUCKET'].

CloudFormation will create this environment variable later.

# filename: website_to_rss.py
import os
from datetime import datetime, timedelta
from urllib.request import urlopen

from bs4 import BeautifulSoup
from rfeed import Item, Feed, Guid
import boto3

URL = "https://www.oth-regensburg.de/fakultaeten/informatik-und-mathematik/schwarzes-brett.html"
RSS_TITLE = "OTH Regensburg Fakultät Informatik - Schwarzes Brett"
RSS_LINK = "http://www.oth-regensburg.de"
RSS_DESCRIPTION = "RSS feed for: OTH Regensburg Fakultät Informatik - Schwarzes Brett"
RSS_AUTHOR = "Fakultät Informatik"
RSS_LANGUAGE = "de-DE"

s3_client = boto3.client("s3")


def article_to_item(article):
    # Convert one article element of the website into an RSS item
    return Item(
        title=article.h2.a.text.strip(),
        link=article.h2.a["href"],
        description=" ".join([x.text.strip() for x in article.find_all("p")]),
        author=RSS_AUTHOR,
        guid=Guid(article.h2.a["href"]),
        pubDate=datetime.strptime(article.h2.span.text[:-3], r"%d.%m.%Y"),
    )


def lambda_handler(event, context):
    print("Start lambda function")

    print(f"Start: Reading URL: {URL}")
    soup = BeautifulSoup(urlopen(URL).read(), "html.parser")
    print("Finished: Reading URL")

    articles = soup.find_all("div", class_="article")
    print(f"Found {len(articles)} articles")

    print("Start: Parsing articles")
    items = []
    twoWeeksAgo = datetime.now() - timedelta(days=14)
    for article in articles:
        rssItem = article_to_item(article)
        # only show articles that are less than 2 weeks old
        if rssItem.pubDate > twoWeeksAgo:
            items.append(rssItem)
    print("Finished: Parsing articles")
    print(f"There are {len(items)} RSS-Feed items which are less than 2 weeks old")

    print("Create Feed")
    feed = Feed(
        title=RSS_TITLE,
        link=RSS_LINK,
        description=RSS_DESCRIPTION,
        language=RSS_LANGUAGE,
        lastBuildDate=datetime.now(),
        items=items,
    )

    # /tmp is the only writable directory inside the Lambda environment
    print("Write temporary file: rss.xml")
    with open("/tmp/rss.xml", mode="w", encoding="utf-8") as xml:
        xml.write(feed.rss())

    print(f"Upload file to S3: {os.environ['S3_BUCKET']}")
    # upload_file returns None and raises an exception on failure,
    # so there is no response object to log
    s3_client.upload_file("/tmp/rss.xml", os.environ["S3_BUCKET"], "oth/rss.xml")
    print("Finished: Upload file to S3")

    print("End lambda function")


if __name__ == "__main__":
    # Local test run; requires S3_BUCKET to be set in the environment
    lambda_handler({"job_name": "test"}, "")
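The date parsing and the two-week filter can be tried out without any network access or AWS account. The following is a minimal sketch using only the standard library. It assumes the date strings look like 24.12.2021 once the trailing characters have been cut off, as in article_to_item; the helper names parse_date and is_recent and the sample dates are made up for this illustration and are not part of the Lambda code.

```python
from datetime import datetime, timedelta

DATE_FORMAT = "%d.%m.%Y"  # day.month.year, the format used in article_to_item


def parse_date(text):
    """Parse a date string such as '24.12.2021' (hypothetical helper)."""
    return datetime.strptime(text.strip(), DATE_FORMAT)


def is_recent(pub_date, now, max_age_days=14):
    """Return True if pub_date is less than max_age_days before now."""
    return pub_date > now - timedelta(days=max_age_days)


if __name__ == "__main__":
    now = datetime(2021, 12, 31)  # fixed "now" so the result is reproducible
    for text in ["24.12.2021", "01.12.2021"]:
        print(text, is_recent(parse_date(text), now))
```

Passing the current time in as a parameter, instead of calling datetime.now() inside the function, makes the filter easy to check with fixed dates.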

CloudFormation

To use CloudFormation, we have to provide a template file with all the resources we want to provision.

Parameters

First we will create a Parameter for the S3 bucket name and one for the path to the RSS file in the S3 bucket.

Parameters:
  S3BucketName:
    Description: >-
      S3 bucket to store the xml file for the RSS feed.
    Type: String
    MinLength: '3'
    MaxLength: '63'
  XMLPath:
    Description: >-
      The path to the RSS xml file in the S3 Bucket.
    Type: String
    MinLength: '5'
    MaxLength: '63'
    Default: '/oth/rss.xml'

The S3BucketName and XMLPath parameters are referenced in the Resources section by the S3 bucket and its bucket policy.

Resources

The S3 bucket will be the public endpoint for everyone who wants to read the RSS feed.

This is why we set the AccessControl to PublicRead.

To make files in the bucket public, we also have to provide a BucketPolicy.

We allow everyone to read arn:aws:s3:::<RSSBucket>/oth/rss.xml, but no other file.

Resources:
  RSSBucket:
    Type: 'AWS::S3::Bucket'
    Properties:
      BucketName: !Ref S3BucketName
      AccessControl: PublicRead
  BucketPolicy:
    Type: AWS::S3::BucketPolicy
    Properties:
      PolicyDocument:
        Id: MyPolicy
        Version: 2012-10-17
        Statement:
          - Sid: PublicReadForGetBucketObjects
            Effect: Allow
            Principal: '*'
            Action: 's3:GetObject'
            Resource: !Join
              - ''
              - - 'arn:aws:s3:::'
                - !Ref RSSBucket
                - !Ref XMLPath
      Bucket: !Ref RSSBucket
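To see which object the !Join in the bucket policy resolves to, the concatenation can be reproduced in a few lines of Python. This is only an illustration: the function name object_arn is hypothetical, and the bucket name is the one passed to the deploy command later in this post.

```python
def object_arn(bucket_name, xml_path):
    """Mimic the !Join with an empty delimiter from the bucket policy."""
    # Nothing is inserted between the parts, so the path has to bring its
    # own leading slash. This is why the XMLPath default is '/oth/rss.xml'.
    return "".join(["arn:aws:s3:::", bucket_name, xml_path])


if __name__ == "__main__":
    print(object_arn("website-to-rss-feed", "/oth/rss.xml"))
```

If XMLPath were given without the leading slash, the bucket name and the path would run together and the policy would no longer match the uploaded file.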

Next we have to give the Lambda function, which we will create in the next part, permission to write logs to CloudWatch Logs, put objects in the S3 bucket and read the Python code from another S3 bucket.

We create a role with the AWSLambdaBasicExecutionRole policy attached.

The Lambda function can assume this role to write logs to CloudWatch Logs.

To allow the Lambda function to write to the S3 bucket we created, we add an inline policy to the LambdaRole.

The Lambda function also needs permission to read the Python code it executes.

For this I created an S3 bucket named website-to-rss-lambda, to which we will later upload the Python code as source.zip.

  LambdaRole:
    Type: 'AWS::IAM::Role'
    Properties:
      RoleName: lambda-rss-role
      AssumeRolePolicyDocument:
        Version: 2012-10-17
        Statement:
          - Effect: Allow
            Principal:
              Service: lambda.amazonaws.com
            Action: 'sts:AssumeRole'
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole
  LambdaInlinePolicyForS3:
    Type: 'AWS::IAM::Policy'
    Properties:
      PolicyName: lambda-access-s3
      PolicyDocument:
        Version: 2012-10-17
        Statement:
          - Sid: AllowPutForAllObjects
            Effect: Allow
            Action:
              - 's3:PutObject'
            Resource: !Join
              - ''
              - - 'arn:aws:s3:::'
                - !Ref RSSBucket
                - /*
          - Sid: AllowGetCode
            Effect: Allow
            Action:
              - 's3:GetObject'
            Resource: arn:aws:s3:::website-to-rss-lambda/source.zip
      Roles:
        - Ref: LambdaRole

Now we create the Lambda function. Here we can set the handler that is executed, the memory size, the runtime and other things. We also specify where the code is located and which role gives the Lambda function all the permissions it needs.

The Lambda function needs to know the S3 bucket to which we want to upload the xml file.

For this we use the environment variable S3_BUCKET.

  WebsiteToRSSFunction:
    Type: AWS::Lambda::Function
    Properties:
      Description: Read website and update RSS feed
      FunctionName: website-to-rss-function
      Handler: website_to_rss.lambda_handler
      MemorySize: 128
      Role: !GetAtt "LambdaRole.Arn"
      Runtime: python3.8
      Timeout: 5
      Code:
        S3Bucket: website-to-rss-lambda
        S3Key: source.zip
      Environment:
        Variables:
          S3_BUCKET: !Ref S3BucketName

The last thing we need to specify is how and when the lambda function will be triggered to read the website and update the RSS feed.

We create an Event Rule to trigger the lambda function in the Targets property every 10 minutes.

To allow this rule to trigger the lambda function, we will grant the TriggerLambdaRule the Permission to execute InvokeFunction on the lambda function WebsiteToRSSFunction.

  TriggerLambdaRule:
    Type: AWS::Events::Rule
    Properties:
      Description: Trigger the lambda function every 10 min to update the RSS feed
      ScheduleExpression: "rate(10 minutes)"
      State: ENABLED
      Targets:
        -
          Arn: !GetAtt "WebsiteToRSSFunction.Arn"
          Id: id1
  PermissionForEventsToInvokeLambda:
    Type: AWS::Lambda::Permission
    Properties:
      FunctionName:
        Ref: "WebsiteToRSSFunction"
      Action: "lambda:InvokeFunction"
      Principal: "events.amazonaws.com"
      SourceArn: !GetAtt "TriggerLambdaRule.Arn"
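When the rule fires, the Lambda function receives an event describing the schedule trigger instead of the {"job_name": "test"} dictionary used for local runs. The sketch below shows the general shape of such an event and a hypothetical helper, is_scheduled_invocation, that tells the two apart. The account ID, region, rule name and timestamp are placeholders, and the handler in this post does not need such a check because it ignores the event.

```python
# Abbreviated example of the event a scheduled rule sends to its target.
# Account ID, region, rule name and time are placeholders.
SAMPLE_EVENT = {
    "version": "0",
    "detail-type": "Scheduled Event",
    "source": "aws.events",
    "account": "123456789012",
    "time": "2021-12-31T12:00:00Z",
    "region": "eu-central-1",
    "resources": [
        "arn:aws:events:eu-central-1:123456789012:rule/TriggerLambdaRule-EXAMPLE"
    ],
    "detail": {},
}


def is_scheduled_invocation(event):
    """Return True if the event looks like a schedule trigger."""
    return (
        event.get("source") == "aws.events"
        and event.get("detail-type") == "Scheduled Event"
    )


if __name__ == "__main__":
    print(is_scheduled_invocation(SAMPLE_EVENT))
    print(is_scheduled_invocation({"job_name": "test"}))
```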

Outputs

After the stack is created, we want to know the URL for the RSS reader.

The Outputs section in CloudFormation can be used to create and output this value.

To build the TargetURL we use the URL of the S3 Bucket and the path of the xml file.

Outputs:
  TargetURL:
    Description: The URL of this RSS feed
    Value: !Join
      - ''
      - - !GetAtt RSSBucket.DomainName
        - !Ref XMLPath

Template

Put together, the template file cf-template.yaml will look like this:

AWSTemplateFormatVersion: 2010-09-09
Parameters:
  S3BucketName:
    Description: >-
      S3 bucket to store the xml file for the RSS feed.
    Type: String
    MinLength: '3'
    MaxLength: '63'
  XMLPath:
    Description: >-
      The path to the RSS xml file in the S3 Bucket.
    Type: String
    MinLength: '5'
    MaxLength: '63'
    Default: '/oth/rss.xml'
Resources:
  RSSBucket:
    Type: 'AWS::S3::Bucket'
    Properties:
      BucketName: !Ref S3BucketName
      AccessControl: PublicRead
  BucketPolicy:
    Type: AWS::S3::BucketPolicy
    Properties:
      PolicyDocument:
        Id: MyPolicy
        Version: 2012-10-17
        Statement:
          - Sid: PublicReadForGetBucketObjects
            Effect: Allow
            Principal: '*'
            Action: 's3:GetObject'
            Resource: !Join
              - ''
              - - 'arn:aws:s3:::'
                - !Ref RSSBucket
                - !Ref XMLPath
      Bucket: !Ref RSSBucket
  LambdaRole:
    Type: 'AWS::IAM::Role'
    Properties:
      RoleName: lambda-rss-role
      AssumeRolePolicyDocument:
        Version: 2012-10-17
        Statement:
          - Effect: Allow
            Principal:
              Service: lambda.amazonaws.com
            Action: 'sts:AssumeRole'
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole
  LambdaInlinePolicyForS3:
    Type: 'AWS::IAM::Policy'
    Properties:
      PolicyName: lambda-access-s3
      PolicyDocument:
        Version: 2012-10-17
        Statement:
          - Sid: AllowPutForAllObjects
            Effect: Allow
            Action:
              - 's3:PutObject'
            Resource: !Join
              - ''
              - - 'arn:aws:s3:::'
                - !Ref RSSBucket
                - /*
          - Sid: AllowGetCode
            Effect: Allow
            Action:
              - 's3:GetObject'
            Resource: arn:aws:s3:::website-to-rss-lambda/source.zip
      Roles:
        - Ref: LambdaRole
  WebsiteToRSSFunction:
    Type: AWS::Lambda::Function
    Properties:
      Description: Read website and update RSS feed
      FunctionName: website-to-rss-function
      Handler: website_to_rss.lambda_handler
      MemorySize: 128
      Role: !GetAtt "LambdaRole.Arn"
      Runtime: python3.8
      Timeout: 5
      Code:
        S3Bucket: website-to-rss-lambda
        S3Key: source.zip
      Environment:
        Variables:
          S3_BUCKET: !Ref S3BucketName
  TriggerLambdaRule:
    Type: AWS::Events::Rule
    Properties:
      Description: Trigger the lambda function every 10 min to update the RSS feed
      ScheduleExpression: "rate(10 minutes)"
      State: ENABLED
      Targets:
        -
          Arn: !GetAtt "WebsiteToRSSFunction.Arn"
          Id: id1
  PermissionForEventsToInvokeLambda:
    Type: AWS::Lambda::Permission
    Properties:
      FunctionName:
        Ref: "WebsiteToRSSFunction"
      Action: "lambda:InvokeFunction"
      Principal: "events.amazonaws.com"
      SourceArn: !GetAtt "TriggerLambdaRule.Arn"
Outputs:
  TargetURL:
    Description: The URL of this RSS feed
    Value: !Join
      - ''
      - - !GetAtt RSSBucket.DomainName
        - !Ref XMLPath

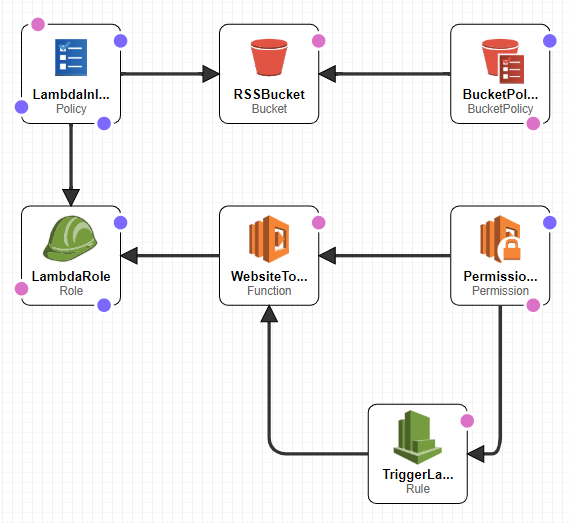

You can check your template for errors in CloudFormation Designer. There the template will look like this:

AWS CLI

To create this CloudFormation Stack we first have to bundle the python code and all dependencies into a zip file, which we can upload to S3.

First we need a file called requirements.txt in the root folder of the project:

beautifulsoup4

rfeed

boto3

The folder structure should look like this:

root
|- cf-template.yaml
|- requirements.txt
|- source/
   |- website_to_rss.py

Install the python dependencies to the source folder and zip the contents of the folder:

pip3 install -r requirements.txt -t source/

cd source

zip -r source.zip *

mv source.zip ..

cd ..
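On systems without the zip command, the same archive can be built with Python's standard library. This is a sketch of an alternative, not the method used in this post; the function name zip_folder is made up. Like the commands above, it stores the files relative to the source folder, so that website_to_rss.py ends up at the root of the archive where Lambda expects the handler.

```python
import zipfile
from pathlib import Path


def zip_folder(source_dir, zip_path):
    """Zip the contents of source_dir so the files sit at the archive root."""
    source = Path(source_dir)
    target = Path(zip_path).resolve()
    with zipfile.ZipFile(target, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(source.rglob("*")):
            # Skip directories and the archive itself, in case it is
            # created inside the folder that is being zipped.
            if path.is_file() and path.resolve() != target:
                # Store paths relative to source_dir, like `cd source; zip -r`.
                archive.write(path, path.relative_to(source).as_posix())
    return target
```

Calling zip_folder("source", "source.zip") from the root folder of the project, after the pip3 install step, produces the file that is uploaded in the next step.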

Upload the zip file to the S3 Bucket that is referenced in the cf-template.yaml for the lambda code:

aws s3 cp source.zip s3://website-to-rss-lambda/

Now everything is ready to create the CloudFormation Stack:

- --template-file: provide the name of the template file.

- --stack-name: name the CloudFormation Stack.

- --capabilities: allow the command to create IAM resources with CAPABILITY_NAMED_IAM.

- --parameter-overrides: set the parameters for the template. Here S3BucketName=website-to-rss-feed.

aws cloudformation deploy --template-file cf-template.yaml --stack-name website-to-rss-stack --capabilities CAPABILITY_NAMED_IAM --parameter-overrides S3BucketName=website-to-rss-feed

To get the TargetURL which we have defined in the Outputs section, we will use aws cloudformation describe-stacks.

aws cloudformation describe-stacks --stack-name website-to-rss-stack
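The command prints a JSON document in which the outputs are nested under Stacks and Outputs. The following sketch shows one way to pick out TargetURL with Python. The sample JSON is shortened and written by hand for illustration (a real response contains many more fields), and get_output is a hypothetical helper name.

```python
import json

# Illustrative, shortened output of `aws cloudformation describe-stacks`.
SAMPLE_OUTPUT = """
{
  "Stacks": [
    {
      "StackName": "website-to-rss-stack",
      "Outputs": [
        {
          "OutputKey": "TargetURL",
          "OutputValue": "website-to-rss-feed.s3.amazonaws.com/oth/rss.xml",
          "Description": "The URL of this RSS feed"
        }
      ]
    }
  ]
}
"""


def get_output(describe_stacks_json, key):
    """Return the value of one stack output, or None if it is missing."""
    stacks = json.loads(describe_stacks_json)["Stacks"]
    for output in stacks[0].get("Outputs", []):
        if output["OutputKey"] == key:
            return output["OutputValue"]
    return None


if __name__ == "__main__":
    target = get_output(SAMPLE_OUTPUT, "TargetURL")
    # The template joins the bucket domain name and the path without a
    # scheme, so one is prepended before the URL goes into an RSS reader.
    print(f"https://{target}")
```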

Note that the xml file will only be available after the Lambda function has been triggered for the first time, which is 10 minutes after the stack is created.
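Once the file exists, a quick way to check that it is well-formed is to parse it and list the item titles. The sketch below does this with the standard library on a hand-written sample; the item in it is made up, the real file produced by rfeed contains more elements, and item_titles is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Hand-written sample in the shape of an RSS 2.0 feed; the item is made up.
SAMPLE_RSS = """<rss version="2.0">
  <channel>
    <title>OTH Regensburg Fakultät Informatik - Schwarzes Brett</title>
    <link>http://www.oth-regensburg.de</link>
    <description>RSS feed</description>
    <item>
      <title>Example lecture cancelled</title>
      <link>https://example.com/article-1</link>
    </item>
  </channel>
</rss>
"""


def item_titles(rss_xml):
    """Parse an RSS document and return the titles of all its items."""
    root = ET.fromstring(rss_xml)  # raises ParseError if not well-formed
    return [item.findtext("title") for item in root.iter("item")]


if __name__ == "__main__":
    for title in item_titles(SAMPLE_RSS):
        print(title)
```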

Whenever you need to only update the lambda function code, bundle the source folder again, upload the zip file and update the lambda function:

pip3 install -r requirements.txt -t source/

cd source

zip -r source.zip *

mv source.zip ..

cd ..

aws s3 cp source.zip s3://website-to-rss-lambda/

aws lambda update-function-code --function-name website-to-rss-function --s3-bucket website-to-rss-lambda --s3-key source.zip

If we want to delete the stack with all the created resources, we can use aws cloudformation delete-stack.

As you can only delete empty S3 Buckets, we have to delete everything in the bucket first.

aws s3 rm s3://website-to-rss-feed --recursive

aws cloudformation delete-stack --stack-name website-to-rss-stack

CloudWatch Logs

After 10 minutes you will see your first logs in CloudWatch Logs:

In your RSS reader you can enter the URL from the Outputs section, or use Route 53 to create a DNS entry that points to this URL, like I did: